Wget is non-interactive: once started, it does not require user interaction and does not need to control a TTY, and it can log its progress to a separate file for later inspection. Users can therefore start Wget and log off, leaving the program unattended.
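As a rough illustration of an unattended run, the sketch below builds a Wget command line that detaches from the terminal and writes its progress to a log file. `--background` and `--output-file` are Wget options; the helper name `unattended_wget_command`, the log path, and the example.org URL are assumptions made only for this example.

```python
import shlex


def unattended_wget_command(url, log_path="wget.log"):
    """Build an argv list that runs Wget detached from the terminal.

    Hypothetical helper for illustration; it only assembles the command
    and does not start Wget itself.
    """
    return [
        "wget",
        "--background",               # go to background right after startup
        f"--output-file={log_path}",  # log progress messages to this file
        url,
    ]


if __name__ == "__main__":
    # example.org is a placeholder URL, not taken from the article.
    cmd = unattended_wget_command("https://example.org/big.iso")
    print(shlex.join(cmd))
```

The resulting list can be passed to `subprocess.run` on a system where Wget is installed; the log file can then be inspected after logging back in.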

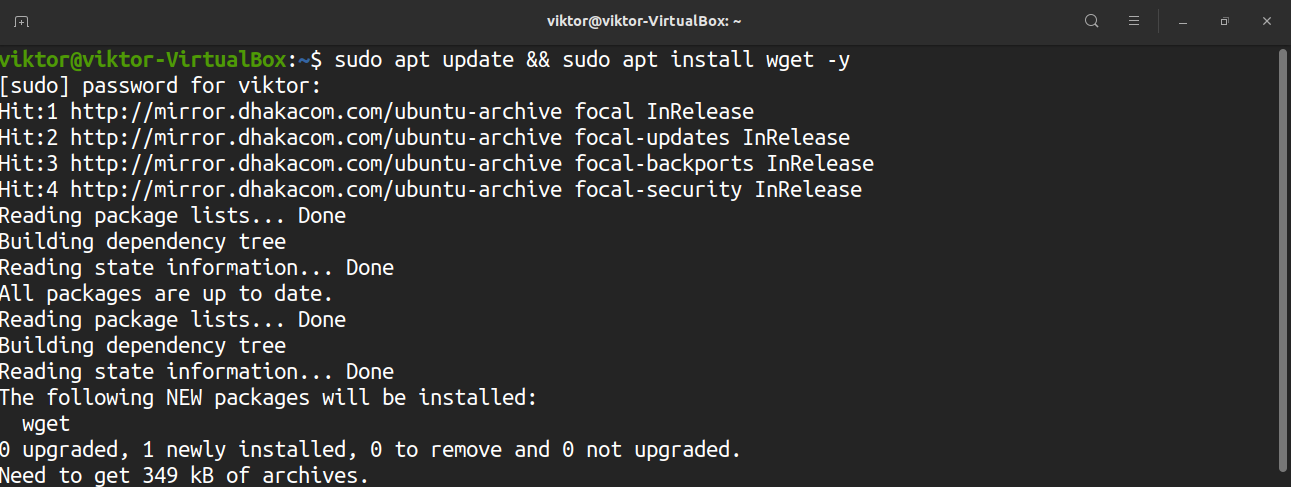

WGET UBUNTU SOFTWARE

Wget makes it easy to mirror HTTP and FTP sites, but this approach is considered less efficient and more error-prone than programs designed for mirroring from the ground up, such as rsync. On the other hand, Wget does not require special server-side software for this task.

WGET UBUNTU DOWNLOAD

When mirroring web sites automatically, Wget honors the Robots Exclusion Standard (unless the option -e robots=off is used). Recursive download works with FTP as well, where Wget issues the LIST command to find which additional files to download, repeating this process for directories and files under the one specified in the top URL. Shell-like wildcards are supported when the download of FTP URLs is requested. When downloading recursively over either HTTP or FTP, Wget can be instructed to inspect the timestamps of local and remote files, and download only the remote files newer than the corresponding local ones.
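The two checks described above, robots exclusion and timestamp comparison, can be sketched with Python's standard library. This is a simplified illustration and not Wget's actual implementation: the function names, the `"Wget"` user-agent string, and the idea of passing the `Last-Modified` header value directly are assumptions for the example, and file-size checks are left out.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from urllib.robotparser import RobotFileParser


def allowed_by_robots(robots_txt, user_agent, url):
    """Return True if the robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def remote_is_newer(last_modified_header, local_mtime):
    """Compare an HTTP Last-Modified value with a local modification time.

    local_mtime is a POSIX timestamp (as returned by os.path.getmtime),
    or None when no local copy exists yet.
    """
    if local_mtime is None:
        return True
    remote = parsedate_to_datetime(last_modified_header)
    if remote.tzinfo is None:
        # Treat a zone-less date as UTC so the comparison stays valid.
        remote = remote.replace(tzinfo=timezone.utc)
    local = datetime.fromtimestamp(local_mtime, tz=timezone.utc)
    return remote > local


def should_fetch(url, robots_txt, last_modified_header, local_mtime,
                 user_agent="Wget"):
    """Fetch only if robots.txt allows it and the remote copy is newer."""
    return (allowed_by_robots(robots_txt, user_agent, url)
            and remote_is_newer(last_modified_header, local_mtime))
```

Skipping the first check corresponds loosely to running with `-e robots=off`; in this sketch that would mean calling `remote_is_newer` on its own.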

WGET UBUNTU OFFLINE

In 2010, Chelsea Manning used Wget to download 250,000 U.S. diplomatic cables and 500,000 Army reports that came to be known as the Iraq War logs and Afghan War logs sent to WikiLeaks.

Wget can optionally work like a web crawler by extracting resources linked from HTML pages and downloading them in sequence, repeating the process recursively until all the pages have been downloaded or a maximum recursion depth specified by the user has been reached. The downloaded pages are saved in a directory structure resembling that on the remote server. This "recursive download" enables partial or complete mirroring of web sites via HTTP. Links in downloaded HTML pages can be adjusted to point to locally downloaded material for offline viewing.

Wget has been designed for robustness over slow or unstable network connections. If a download does not complete due to a network problem, Wget will automatically try to continue the download from where it left off, and repeat this until the whole file has been retrieved. It was one of the first clients to make use of the then-new Range HTTP header to support this feature.
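The resume behaviour that relies on the Range header can be sketched as follows. This is a minimal illustration in Python, not Wget's own code: the function names are hypothetical, the retry loop that Wget wraps around this step is omitted, and a server that rejects the range (for example with status 416 when the file is already complete) will cause `urlopen` to raise an error that the sketch does not handle.

```python
import os
import urllib.request


def build_resume_request(url, partial_path):
    """Return (request, offset) asking only for bytes missing from partial_path."""
    offset = os.path.getsize(partial_path) if os.path.exists(partial_path) else 0
    request = urllib.request.Request(url)
    if offset > 0:
        # "bytes=N-" asks for everything from offset N to the end of the resource.
        request.add_header("Range", f"bytes={offset}-")
    return request, offset


def resume_download(url, partial_path, chunk_size=64 * 1024):
    """Continue a partial download, appending to the bytes already on disk."""
    request, _offset = build_resume_request(url, partial_path)
    with urllib.request.urlopen(request) as response:
        # 206 means the server honoured the Range header; a plain 200 means
        # it is sending the whole file again, so start the local file over.
        mode = "ab" if response.status == 206 else "wb"
        with open(partial_path, mode) as out:
            while True:
                chunk = response.read(chunk_size)
                if not chunk:
                    break
                out.write(chunk)
```

Checking the status code before choosing the file mode matters because not every server supports range requests; appending a full response to a partial file would corrupt it.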

Wget descends from an earlier program named Geturl by the same author, the development of which commenced in late 1995. The name changed to Wget after the author became aware of an earlier Amiga program named GetURL, written by James Burton in AREXX.

Wget filled a gap in the inconsistent web-downloading software available in the mid-1990s. No single program could reliably use both HTTP and FTP to download files. Existing programs either supported FTP (such as NcFTP and dl) or were written in Perl, which was not yet ubiquitous. While Wget was inspired by features of some of the existing programs, it supported both HTTP and FTP and could be built using only the standard development tools found on every Unix system. At that time many Unix users struggled behind extremely slow university and dial-up Internet connections, leading to a growing need for a downloading agent that could deal with transient network failures without assistance from the human operator.

Since version 1.14 Wget has been able to save its output in the web archiving standard WARC format. It has been used as the basis for graphical programs such as GWget for the GNOME Desktop.

Wget has been ported to Microsoft Windows, macOS, OpenVMS, HP-UX, AmigaOS, MorphOS and Solaris.

WGET UBUNTU PORTABLE

GNU Wget (or just Wget, formerly Geturl, also written as its package name, wget) is a computer program that retrieves content from web servers. Its name derives from "World Wide Web" and "get". It supports downloading via HTTP, HTTPS, and FTP. Its features include recursive download, conversion of links for offline viewing of local HTML, and support for proxies. It appeared in 1996, coinciding with the boom in popularity of the Web, which led to its wide use among Unix users and its distribution with most major Linux distributions. Written in portable C, Wget can be easily installed on any Unix-like system.
